For your blog posts to appear in Google search, Googlebot regularly crawls your blog. The bot visits your blog and scans it for new links. As a result, pages that should not be indexed at all (e.g. your legal disclosure) may turn up in Google search.

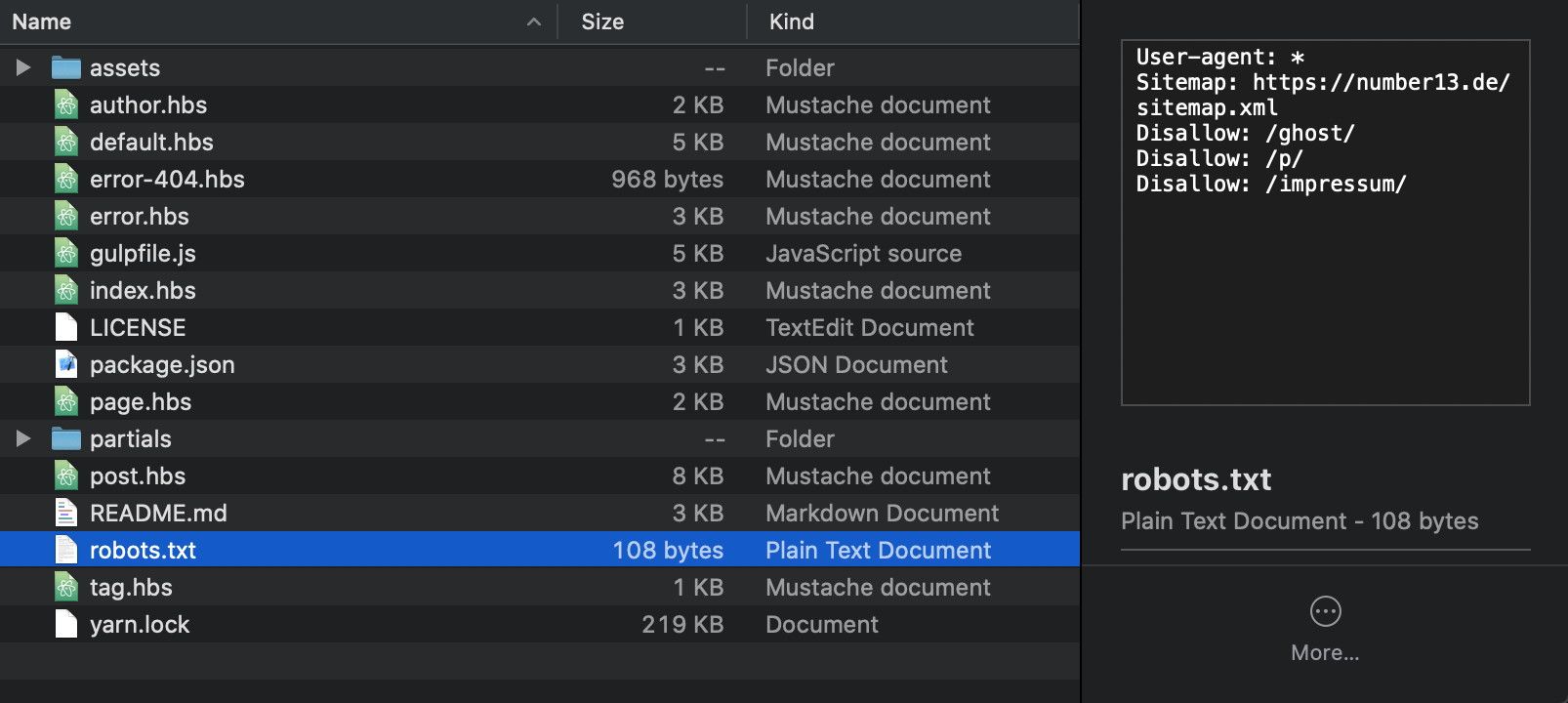

To prevent this, edit the robots.txt file or create a new one. If your theme does not yet contain this file, you can simply create it yourself and place it in the theme's root directory.

The robots.txt file in Ghost blog

If your blog does not contain a custom robots.txt file, Ghost automatically serves a default one, which looks like this:

    User-agent: *
    Sitemap: https://en.number13.de/sitemap.xml
    Disallow: /ghost/
    Disallow: /p/

Here the relative paths /ghost/ and /p/ are already blocked for all crawlers (User-agent: * applies to every bot, including Googlebot). To exclude another page, take this text and add another line, e.g. Disallow: /legal-disclosure/ (as we do at https://en.number13.de/robots.txt).
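To illustrate what the extended file ends up looking like, here is a minimal Python sketch that assembles the default rules plus any extra Disallow lines. The function name `build_robots_txt` is made up for this example and is not part of Ghost; the rules are written without blank lines in between, because some parsers treat a blank line as the end of a rule group.

```python
def build_robots_txt(sitemap_url, extra_paths=()):
    """Return the default rules shown above plus one Disallow line per extra path.

    Hypothetical helper for illustration only, not a Ghost API.
    """
    lines = [
        "User-agent: *",
        f"Sitemap: {sitemap_url}",
        "Disallow: /ghost/",
        "Disallow: /p/",
    ]
    # Append the additional pages that crawlers should stay away from.
    for path in extra_paths:
        lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        build_robots_txt(
            "https://en.number13.de/sitemap.xml",
            ["/legal-disclosure/"],
        ),
        end="",
    )
```

You could save the printed text as robots.txt, or simply type the same five lines by hand.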

As in our example, save the text as robots.txt in the root of your theme. Then zip the theme directory and upload it to your blog again. By visiting https://your-page.de/robots.txt you can check whether the new robots file is actually live.
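If you prefer to script the copy-and-zip step, the following Python sketch shows one possible way to do it. The function name `package_theme` and all paths in the usage comment are placeholders, and the sketch assumes that your Ghost installation accepts a zip with the theme files at the top level of the archive.

```python
import shutil
from pathlib import Path


def package_theme(theme_dir, robots_file, archive_base):
    """Copy robots.txt into the theme root and zip the theme directory.

    Hypothetical helper: archive_base is the output path without ".zip"
    and should lie outside theme_dir so the archive does not include itself.
    Returns the path of the created zip file.
    """
    theme_dir = Path(theme_dir).resolve()
    # Place (or overwrite) robots.txt in the root of the theme.
    shutil.copyfile(robots_file, theme_dir / "robots.txt")
    # Zip the contents of the theme directory; ".zip" is appended automatically.
    return shutil.make_archive(
        str(Path(archive_base).resolve()), "zip", root_dir=theme_dir
    )


# Example call with placeholder paths:
# package_theme("my-theme", "robots.txt", "build/my-theme")
```

The resulting zip is what you then upload in the Ghost admin area.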

This means that all paths listed under Disallow are now off limits for Googlebot. The next time Googlebot visits your site, it will no longer crawl these pages, so they usually drop out of Google search over time. Keep in mind that robots.txt only controls crawling: a blocked URL can still be listed, without a description, if other pages link to it.

Robots Testing Tool to check your robots.txt file

With Google's Robots Testing Tool, you can easily check whether you have done everything right. Just enter any link and start the test. You will immediately see whether that link is allowed or blocked for Googlebot.
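A similar allowed-or-blocked check can also be run locally. The sketch below uses `urllib.robotparser` from the Python standard library against the rules from this post; the helper name `is_allowed` and the sample post URL are assumptions for illustration. Python's parser does simple prefix matching and is not guaranteed to agree with Googlebot in every case, so treat it as a quick sanity check rather than a replacement for Google's tool.

```python
from urllib.robotparser import RobotFileParser

# The rules from this post, written without blank lines between them.
ROBOTS_TXT = """\
User-agent: *
Sitemap: https://en.number13.de/sitemap.xml
Disallow: /ghost/
Disallow: /p/
Disallow: /legal-disclosure/
"""


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://en.number13.de/legal-disclosure/",
        "https://en.number13.de/ghost/",
        "https://en.number13.de/some-post/",  # hypothetical post URL
    ):
        status = "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked"
        print(f"{url}: {status}")
```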
